Making online conferences more accessible

Accessibility has been a core focus of our conferences since the very beginning, with something like 20% of our very first conference way back in 2004 focussed specifically on the topic (sadly the site for that conference is lost to the sands of time).

And all these years later, in 2021, we’ve come somewhat full circle with Access All Areas, our brand new conference entirely dedicated to accessibility for our core audience of front end developers, which takes place later this month.

The accessibility of conferences

Now, at first glance, online conferences would appear to be inherently more accessible than in-person events:

- They can take place wherever it might suit an attendee (no need for travel, no concern about the accessibility of a conference venue and its facilities)

- They’re on people’s devices, and so whatever assistive technology someone uses, they’ll be using that to access the content, making the content inherently more accessible.

So naturally they’ll be more accessible for disabled people.

Anyway.

At Web Directions we’ve been thinking a lot about (and working on) making our conferences as accessible as possible since we started planning to take our events online in early 2020.

Today I’d like to talk about some of the obvious, and less obvious, ways in which online content associated with conferences might be less accessible than is ideal, and how we went about addressing these.

Sound and vision

There are two ways in which information in a presentation is conveyed: sound (almost entirely the speaker delivering the presentation), and the visuals of the presentation. Each presents very significant accessibility challenges for distinct groups of potential attendees. Let’s look at each of these in turn.

Sound

The majority of the information in most presentations comes from the presenter speaking to the audience. So for folks who are deaf or hard of hearing this presents a significant challenge to accessibility.

But there’s an easy answer to that, right? Captions! Well, it’s not quite as easy an answer as you might think.

Firstly, accurate real-time captioning, particularly of technical content with terms of art, acronyms and so on, is very challenging and expensive to provide. Unlike content that is frequently captioned in real time, like news reports or sports commentary, technical presentations are typically much more information dense, have far higher reading level complexity, and are, as mentioned, full of jargon and acronyms likely unfamiliar except to experts.

We’ve found that human based captioning of technical presentations, even when not done in real time, requires very significant editing to ensure anything like reasonable accuracy (increasingly AI based captioning is approaching human accuracy, but still requires substantial editing). Every transcript we edit will typically take 3-5 times as long as the presentation itself to edit, and that’s after being transcribed.

And, while AI based real-time captioning is a possible solution for an event like a meetup, we felt it certainly wasn’t an acceptable solution for a professional conference.

So, we approach captioning in a slightly different way.

Pre-recording for the win

We had already decided to pre-record all our conference content.

Why? It allows for better quality presentations in a number of ways. Speakers can do multiple takes and redo errors. We can edit to make a much more engaging presentation. We’re not reliant on presenters’ upload speed for their video quality or sound quality (itself an accessibility issue, as many folks find lower quality sound much, much harder to comprehend than good quality audio). In short, pre-recording produces a vastly superior presentation to live streamed presentations in almost every case.

And because presentations are pre-recorded, this also allows us to carefully edit captions to ensure their accuracy.

We then livestream the finished edited video so attendees experience the events simultaneously (which helps facilitate a lively real-time chat among attendees and with speakers right alongside the presentations).

We’ll leave for another day why we’ve built our own system for displaying captions, but in short, captions for live streamed web based video are not delivered in the same way as captions for regular web based video.

More than just accessibility benefits

Let’s also observe that, as is often the case when addressing access for disabled people, we benefit other users as well. English not your first language? Use the captions (or our associated live transcript) to help better understand the speaker. In a noisy environment (say, watching on a large screen with a group of colleagues)? Captions may make the presentation easier to follow. And because there’s an associated transcript, presentations are searchable, and can be indexed by search engines.

Visuals

The second essential component of a presentation is the associated visuals (OK, so once in well over 1,000 presentations since 2004 at one of our conferences a speaker spoke with no visuals…).

The impact of visuals on accessibility is usually almost entirely overlooked. Whether it’s pleasant accompanying visuals, sight jokes (meme GIFs are often used in this way), code, graphs or charts, speakers almost invariably assume the audience can see the screen (how many times have you heard “as you can see from the slide…”?). And then there are screencasts (so much going on), and don’t even get me started on live coding sessions (as a complete aside unrelated to a11y, I hate live coding sessions, maybe the subject for another day).

Screen readers or other assistive devices are of no use here–the visuals on a screen are essentially a binary blob, utterly inaccessible except to people’s eyes. Any text is not actually text a screen reader can read, just bits rendered as pixels.

Enter audio descriptions

So, to address this we initially considered audio descriptions. These are particularly used in television, cinema, and the theatre, where a narrator describes the action, facial expressions and other aspects important to understanding the work that aren’t conveyed in the dialog and soundtrack alone.

Descriptions are usually delivered in gaps between the dialog. And this might seem an ideal solution to our dilemma. But we consulted with audio description experts and came to the conclusion that it wasn’t the ideal solution–there is so little time left for the describer to use given how information-dense presentations typically are, that we felt the descriptions were likely to be more distracting than of value.

Then there’s the nature of a good deal of the content for our conferences: code. How do you audio describe ‘document.querySelectorAll("body > *")’? An expert might describe it as “using querySelectorAll to find every element directly inside the body of an HTML document”. A literal description would be something like “document dot query selector all open brackets, open double quotation mark body greater than sign asterisk close double quotation marks close brackets”.

However, we definitely didn’t want to simply give up there. So we decided to do a couple of things to make slides more accessible to people with visual disabilities.

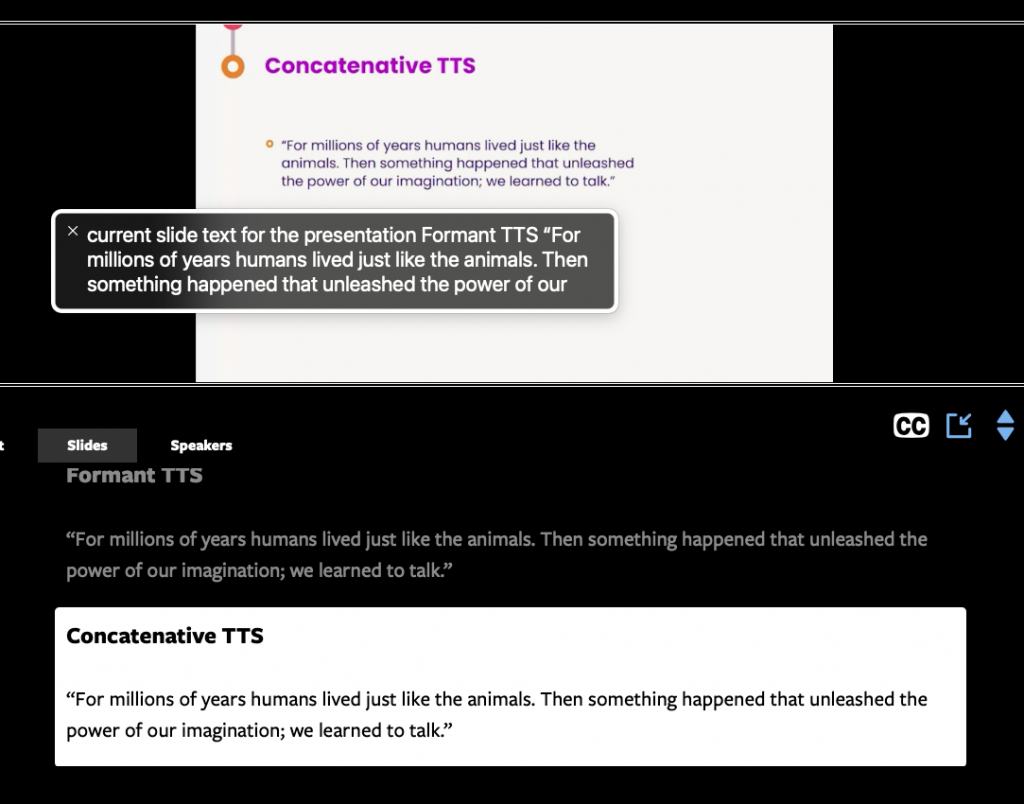

Firstly, we extract all the text, including code examples, from each slide, attempting as much as possible to capture the semantic structure in HTML, including heading levels, lists, ordered lists and so on.
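To make that a little more concrete, here’s a minimal sketch of turning the extracted content of one slide into semantic HTML. It is not the code behind our platform: the slide shape (heading, level, bullets, ordered, code) and the function names are hypothetical, chosen only for illustration.

```javascript
// Escape characters that have special meaning in HTML, so slide text and
// code samples are shown literally rather than interpreted as markup.
function escapeHtml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Hypothetical slide shape (an assumption for this sketch):
//   { heading: string, level: 1-6, bullets: string[], ordered: boolean, code: string }
function slideToHtml(slide) {
  const parts = [];

  if (slide.heading) {
    // Clamp to the heading levels HTML actually has; default to h2.
    const level = Math.min(Math.max(slide.level || 2, 1), 6);
    parts.push(`<h${level}>${escapeHtml(slide.heading)}</h${level}>`);
  }

  if (slide.bullets && slide.bullets.length > 0) {
    // Keep the distinction between ordered and unordered lists.
    const tag = slide.ordered ? "ol" : "ul";
    const items = slide.bullets
      .map((item) => `<li>${escapeHtml(item)}</li>`)
      .join("");
    parts.push(`<${tag}>${items}</${tag}>`);
  }

  if (slide.code) {
    // Code keeps its whitespace and is marked up as code.
    parts.push(`<pre><code>${escapeHtml(slide.code)}</code></pre>`);
  }

  return parts.join("\n");
}
```

Keeping headings, lists and code as real elements, rather than one flat block of text, is what lets a screen reader user navigate a slide the same way they would any other web page.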

In addition, we provide text based descriptions similar to the sort of descriptions an audio describer would provide. Is a speaker using a background image of a unicorn animated gif flying over a rainbow? That gets described. Does a presentation include a bar chart showing the increase in use of ARIA attributes over the last 5 years? We provide a text based description of that too.

And because we’d built our own captioning system, we can synchronise these text based versions of the slides with their appearance in the presentation. All that remained now was to allow screen readers to read them.
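The synchronisation itself can be quite simple in principle. Here’s a minimal sketch, assuming each text version of a slide is stored with the time (in seconds) at which it first appears, sorted by that time. The cue shape and the function name are assumptions for illustration, not a description of our system.

```javascript
// Hypothetical cue list, sorted by start time in seconds:
//   [{ start: 0, text: "Title slide" }, { start: 30, text: "Agenda" }, ...]
//
// Returns the cue for the slide on screen at `time`: the last cue whose
// start is at or before `time`, or null if no slide has appeared yet.
function findActiveSlide(cues, time) {
  let low = 0;
  let high = cues.length - 1;
  let found = -1;

  // Binary search, since the cues are sorted by start time.
  while (low <= high) {
    const mid = (low + high) >> 1;
    if (cues[mid].start <= time) {
      found = mid;      // candidate; look for a later one
      low = mid + 1;
    } else {
      high = mid - 1;
    }
  }

  return found === -1 ? null : cues[found];
}
```

A player would call something like this with the current playback time and swap in the matching slide text whenever the result changes.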

ARIA Live Regions

Which is where a technology that will be covered during Access All Areas, ARIA Live Regions, comes in.

Live regions allow you to mark up your content in a way that alerts assistive technologies like screen readers to content that might change on the page. These technologies can then read those changes out when they occur (all under the control of the user, and their familiar screen reader interface).

Some ARIA roles, like ‘alert’, are automatically live regions. To make other elements live regions, you add the attribute aria-live with a value indicating the desired ‘politeness’ level of the live region. The value can be ‘assertive’, but this should be used only sparingly. Typically you should use aria-live="polite", as we do.
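As a rough illustration, and only a sketch rather than our actual implementation, a polite live region for slide text might be wired up like this. The element id, the slide shape and the function name are all hypothetical.

```javascript
// Markup assumed to be in the page (the id is an assumption):
//   <div id="slide-text" aria-live="polite"></div>

// Returns a function that writes a slide's text into the live region.
// It only touches the region when the slide actually changes, so assistive
// technology is not prompted to re-announce text it has already read.
function createSlideAnnouncer(region) {
  let lastSlideId = null;

  return function announce(slide) {
    if (slide.id === lastSlideId) {
      return false; // same slide still on screen, nothing to announce
    }
    lastSlideId = slide.id;
    // Changing the content of an aria-live region is what signals the
    // update to a screen reader; "polite" means it waits its turn.
    region.textContent = slide.text;
    return true;
  };
}

// In a browser this might be used as:
//   const announce = createSlideAnnouncer(document.getElementById("slide-text"));
//   announce({ id: 1, text: "ARIA Live Regions" });
```

The function takes the region as a parameter rather than looking it up itself, which also makes it easy to try out with a plain object in place of a real element.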

Why is this any better than audio descriptions by a human? Screen reader users are in far more control of whether, when and how the text of a slide is read out, including how quickly. Essentially, it makes whatever is on the screen in the presentation accessible via their screen reader, the tool they are most comfortable with for accessing web based content. If a screen reader user uses their screen reader to read out code, then any code examples we provide this way will be read in a way that’s familiar to them as well.

And there’s again the additional benefit to all users: sighted users can also choose to see these slides during the conference, synchronised to the presentation. Any code on the screen can be copied and pasted. Any links can be activated (we turn URLs into links, link to things like a speaker’s Twitter handle and so on). Here’s another great example of how, by providing a more accessible experience for disabled people, all users benefit.
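Turning URLs and handles in slide text into links could look something like the sketch below. This is a simplified illustration under stated assumptions, not our production code: the patterns are deliberately naive, the function names are made up for the example, and the handle in the usage comment is just an example value.

```javascript
// Escape HTML-significant characters before adding any markup of our own.
function escapeHtml(text) {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Wrap http(s) URLs and @handles in anchor elements.
// Assumptions: URLs start with http:// or https://, and a handle is an @
// at the start of the text or after whitespace, followed by up to 15
// word characters.
function linkify(text) {
  const pattern = /(https?:\/\/[^\s<>"]+)|(^|\s)@(\w{1,15})\b/g;

  return escapeHtml(text).replace(pattern, (match, url, lead, handle) => {
    if (url) {
      // Keep sentence punctuation at the end of a URL out of the link.
      const trailing = (url.match(/[.,;:!?)]+$/) || [""])[0];
      const clean = trailing ? url.slice(0, -trailing.length) : url;
      return `<a href="${clean}">${clean}</a>${trailing}`;
    }
    return `${lead}<a href="https://twitter.com/${handle}">@${handle}</a>`;
  });
}

// Example:
//   linkify("Slides at https://example.com/talk. Ask @webdirections")
```

Escaping first and linking second means slide text can never inject markup of its own, which matters when the text comes from a speaker’s slides rather than from us.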

Our online events are here to stay

This recent tweet by Karl Knights struck a chord with us.

From the moment we contemplated taking our events online, we decided that if we did, then that was going to be the main focus of our future. In the past we ran four or more in-person conferences a year, and while we aim to resume our major end of year Summit in 2022, our program of in-depth, focussed online conferences is and will remain the principal focus of what we do from now on. I’ll write more about how online conferences help address diversity and inclusion more broadly for both speakers and attendees in a later post, but done right, online conferences are far more inclusive, far more accessible (and not just to the disabled community).

Though that inclusion comes with effort, not simply by putting an event online.

If you run an online event, whether a meetup, or community event, or for that matter a huge commercial event, and would like some thoughts about how to do this as well as possible, just drop me a line. We’ve helped OZEWAI and Getaboutable deliver accessible online conferences, and learned a lot doing so.

Why return to an old normal that is less than what we’ve achieved during COVID?

A great fear I have is that, after the sacrifices and efforts of so many people during the COVID pandemic, we’ll rush to return to an old and lesser “normal” rather than learn lessons from how we as individuals, organisations and societies adapted (sometimes well, at other times poorly) to these challenges. Our communities often fail the most marginalised, disadvantaged and vulnerable, but COVID taught us it doesn’t have to be that way.

One lesson we’ve learned is online conferences deliver more inclusive, accessible experiences for everyone, and for us at least, they are here to stay.

